Trainable Models

Overview

The ‘Trainable Model’ concept, which is built on a novel similarity-based analysis methodology, allows the user to:

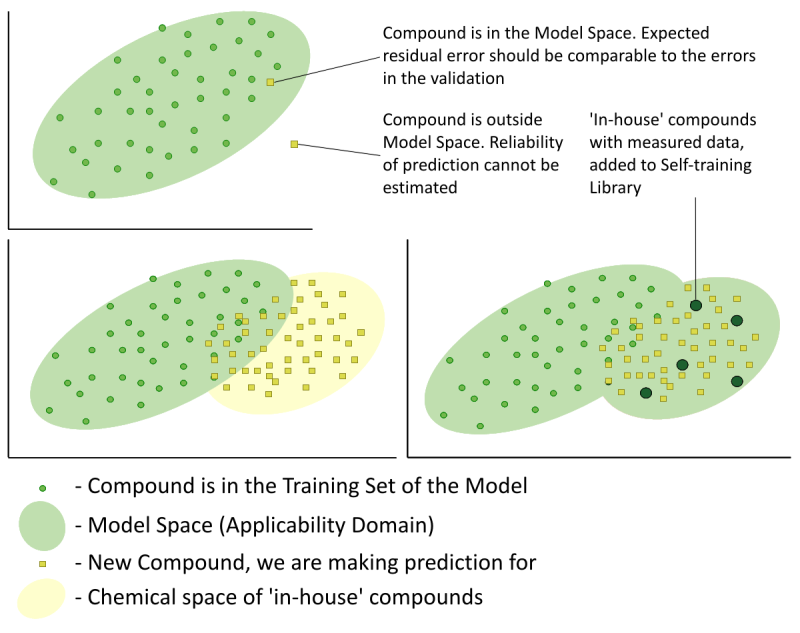

- Assess the quality of the predictions by means of the Reliability Index (RI) estimation. The index ranges from 0 to 1 and indicates whether a submitted compound falls within the Model Applicability Domain. The estimate takes two aspects into account: the similarity of the tested compound to the training set, and the consistency of the experimental values for similar compounds.

- Instantly expand the Model Applicability Domain by adding any user-defined proprietary ‘in-house’ experimental data for the property of interest.
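To make the two ingredients of the Reliability Index concrete, the following minimal Python sketch combines them into a single score between 0 and 1. It is an illustration only: the function name `reliability_index`, the `scale` parameter, and the formula itself (mean similarity multiplied by a spread-based consistency term) are assumptions made for this example and are not the formula used by ACD/Percepta.

```python
from statistics import mean, pstdev


def reliability_index(similarities, residuals, scale=1.0):
    """Toy Reliability Index in [0, 1] (hypothetical formula).

    similarities: similarity of the query compound to each of its most
        similar library compounds, each value in [0, 1].
    residuals: experimental value minus baseline prediction for the
        same library compounds, in the same order.
    scale: assumed spread of residuals at which the consistency term
        drops to 0.5.
    """
    if not similarities or len(similarities) != len(residuals):
        raise ValueError("need equally long, non-empty inputs")

    # Aspect 1: how close the query is to known compounds.
    similarity_term = mean(similarities)

    # Aspect 2: how consistent the experimental deviations are among
    # those compounds (zero spread gives 1.0, large spread approaches 0).
    spread = pstdev(residuals)
    consistency_term = 1.0 / (1.0 + spread / scale)

    return similarity_term * consistency_term


if __name__ == "__main__":
    # Close neighbours with consistent deviations score high...
    print(reliability_index([0.9, 0.8, 0.85], [0.3, 0.3, 0.3]))
    # ...while distant neighbours with scattered deviations score low.
    print(reliability_index([0.2, 0.1], [1.5, -1.5]))
```

In this sketch a compound scores high only when both aspects are favourable: high similarity cannot compensate for inconsistent experimental data among the neighbours, and vice versa.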

Each ‘Trainable Model’ consists of the following parts:

- A structure-based QSAR/QSPR for the prediction of the property of interest derived from a literature training set – the baseline QSAR/QSPR.

- A user-defined data set with experimental values for the property of interest – the Self-training Library.

- A special similarity-based routine that identifies the most similar compounds in the Self-training Library and, considering their experimental values, calculates the systematic deviations produced by the baseline QSAR/QSPR for each submitted molecule – the training engine.
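The interplay of the three parts can be illustrated with a short Python sketch. It assumes that similarities between the submitted molecule and the library compounds are already available as numbers between 0 and 1, and it corrects the baseline value with a similarity-weighted average of the deviations observed for the k most similar compounds. The function name `corrected_prediction`, the parameter `k`, and the weighting scheme are hypothetical and do not describe the actual training engine.

```python
def corrected_prediction(baseline_value, neighbours, k=5):
    """Correct a baseline prediction using similar library compounds.

    baseline_value: baseline QSAR/QSPR prediction for the submitted molecule.
    neighbours: iterable of (similarity, experimental, baseline) tuples,
        one per Self-training Library compound, where `baseline` is the
        baseline prediction for that library compound.
    k: assumed number of most similar compounds to use.
    """
    # Keep only the k most similar library compounds.
    top = sorted(neighbours, key=lambda n: n[0], reverse=True)[:k]

    total_weight = sum(similarity for similarity, _, _ in top)
    if total_weight == 0:
        # Nothing similar in the library: fall back to the baseline.
        return baseline_value

    # Systematic deviation = similarity-weighted mean of
    # (experimental - baseline) over the selected compounds.
    deviation = sum(
        similarity * (experimental - baseline)
        for similarity, experimental, baseline in top
    ) / total_weight

    return baseline_value + deviation


if __name__ == "__main__":
    library = [
        (1.0, 3.0, 2.5),  # very similar; baseline underestimates by 0.5
        (0.5, 1.0, 1.0),  # less similar; baseline is exact
    ]
    print(corrected_prediction(2.0, library))
```

Adding a compound to the library only appends one more tuple to `neighbours`, which is why, in a scheme of this kind, new experimental data can take effect without refitting the baseline model.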

The current version of ACD/Percepta implements the ‘Trainable Model’ methodology for the prediction of the following properties:

- P-gp Specificity

  - Trainable P-gpS

    Calculates the probability of a compound being a P-gp substrate.

  - Trainable P-gpI

    Predicts the probability for a compound to act as a P-gp inhibitor.

- Solubility

  - Trainable LogSw

    Calculates quantitative solubility in pure water (LogSw, mmol/ml).

  - Trainable LogS

    Calculates quantitative solubility in buffer at selected pH values (LogS, mmol/ml at pH=1.7, 6.5 and 7.4).

  - Trainable Qual.S

    Estimates probabilities for the solubility of the compound in buffer (S, mg/ml at pH=7.4) to exceed selected thresholds (0.1, 1 and 10 mg/ml).

- Plasma Protein Binding

  - Trainable LogKa

    Predicts the compound's equilibrium binding constant to human serum albumin in the blood plasma (LogKaHSA).

  - Trainable PPB

    Estimates the fraction of the compound bound to the blood plasma proteins (%PPB).

- Partitioning

  - Trainable LogP

    Calculates the logarithm of the octanol-water partition coefficient for the neutral form of the compound (LogP).

  - Trainable LogD

    Predicts the logarithm of the apparent octanol-water partition coefficient at selected pH values (LogD at pH=1.7, 6.5 and 7.4), taking into account all species of the compound present in the system, including ionized ones.

- Ionization constants

  - Trainable pKa Full

    Calculates pKa constants for all ionization stages.

- Cytochrome P450 Inhibitor Specificity

  Calculates the probability of a compound being an inhibitor of a particular cytochrome P450 enzyme with IC50 below one of two selected thresholds (general inhibition: IC50 < 50 μM; efficient inhibition: IC50 < 10 μM). Predictions are available for five P450 isoforms:

  - Trainable CYP1A2 I

  - Trainable CYP2C19 I

  - Trainable CYP2C9 I

  - Trainable CYP2D6 I

  - Trainable CYP3A4 I

- Cytochrome P450 Substrate Specificity

  Calculates the probability of a compound being metabolized by a particular cytochrome P450 enzyme. Predictions are available for five P450 isoforms:

  - Trainable CYP1A2 S

  - Trainable CYP2C19 S

  - Trainable CYP2C9 S

  - Trainable CYP2D6 S

  - Trainable CYP3A4 S

Note: With the exception of Trainable pKa Full and the pH-dependent Trainable LogD and Trainable LogS, predictions of all ‘Trainable Models’ (including Reliability Index estimations) are also available in the corresponding ordinary ACD/Percepta modules. These predictions are based on the Built-in Self-training Libraries; the only differences are that the user cannot add compounds to the models and that the results are presented differently.

Built-in Self-training Libraries

As a starting point for the calculations, ACD/Percepta provides one or more Built-in Self-training Libraries with experimental values of the corresponding property for each ‘Trainable Model’:

- Trainable P-gpS

  - Built-in P-gpS Self-training Library - 1596 compounds.

- Trainable P-gpI

  - Built-in P-gpI Self-training Library - 2006 compounds.

- Trainable LogSw

  - Built-in LogSw Self-training Library - 6807 compounds.

- Trainable LogS

  - Built-in LogS(pH=1.7) Self-training Library - 6807 compounds.

  - Built-in LogS(pH=6.5) Self-training Library - 6807 compounds.

  - Built-in LogS(pH=7.4) Self-training Library - 6807 compounds.

- Trainable Qual.S

  - Built-in Qualitative Solubility (S(7.4) > 0.1 mg/ml) Self-training Library - 7587 compounds.

  - Built-in Qualitative Solubility (S(7.4) > 1 mg/ml) Self-training Library - 8163 compounds.

  - Built-in Qualitative Solubility (S(7.4) > 10 mg/ml) Self-training Library - 7973 compounds.

- Trainable LogKa

  - Built-in LogKa(HSA) Self-training Library - 334 compounds.

- Trainable PPB

  - Built-in %PPB Self-training Library - 1453 compounds.

- Trainable LogP

  - Built-in LogP Self-training Library - 16277 compounds.

- Trainable LogD

  - Built-in LogD(pH=1.7) Self-training Library - 16277 compounds.

  - Built-in LogD(pH=6.5) Self-training Library - 16277 compounds.

  - Built-in LogD(pH=7.4) Self-training Library - 16321 compounds.

- Trainable pKa Full

  - Built-in pKa Self-training Library - 20264 entries.

- Trainable CYP1A2 I

  - Built-in CYP1A2 Inhibition (IC50 < 10 μM) Self-training Library - 5815 compounds.

  - Built-in CYP1A2 Inhibition (IC50 < 50 μM) Self-training Library - 4867 compounds.

- Trainable CYP2C19 I

  - Built-in CYP2C19 Inhibition (IC50 < 10 μM) Self-training Library - 6833 compounds.

  - Built-in CYP2C19 Inhibition (IC50 < 50 μM) Self-training Library - 6899 compounds.

- Trainable CYP2C9 I

  - Built-in CYP2C9 Inhibition (IC50 < 10 μM) Self-training Library - 7677 compounds.

  - Built-in CYP2C9 Inhibition (IC50 < 50 μM) Self-training Library - 7666 compounds.

- Trainable CYP2D6 I

  - Built-in CYP2D6 Inhibition (IC50 < 10 μM) Self-training Library - 7507 compounds.

  - Built-in CYP2D6 Inhibition (IC50 < 50 μM) Self-training Library - 7707 compounds.

- Trainable CYP3A4 I

  - Built-in CYP3A4 Inhibition (IC50 < 10 μM) Self-training Library - 7927 compounds.

  - Built-in CYP3A4 Inhibition (IC50 < 50 μM) Self-training Library - 6684 compounds.

- Trainable CYP1A2 S

  - Built-in CYP1A2 Substrates Self-training Library - 935 compounds.

- Trainable CYP2C19 S

  - Built-in CYP2C19 Substrates Self-training Library - 794 compounds.

- Trainable CYP2C9 S

  - Built-in CYP2C9 Substrates Self-training Library - 867 compounds.

- Trainable CYP2D6 S

  - Built-in CYP2D6 Substrates Self-training Library - 1001 compounds.

- Trainable CYP3A4 S

  - Built-in CYP3A4 Substrates Self-training Library - 960 compounds.

Note: The size of the Built-in pKa Self-training Library is given as a total number of entries rather than as a number of compounds, since the library may contain experimental data for several ionogenic centers in the same molecule.

Each library comes in two identical copies – ‘Read-only’ and ‘Editable’. The user is free to edit the contents of the ‘Editable’ version, while no alterations are allowed to the ‘Read-only’ library, which can be regarded as a backup copy of the original data. In all other respects the two copies have the same functionality: both can be used in calculations or as an initial data source for the creation of user-defined Self-training Libraries.